Learning to Represent Haptic Feedback for Partially-Observable Tasks

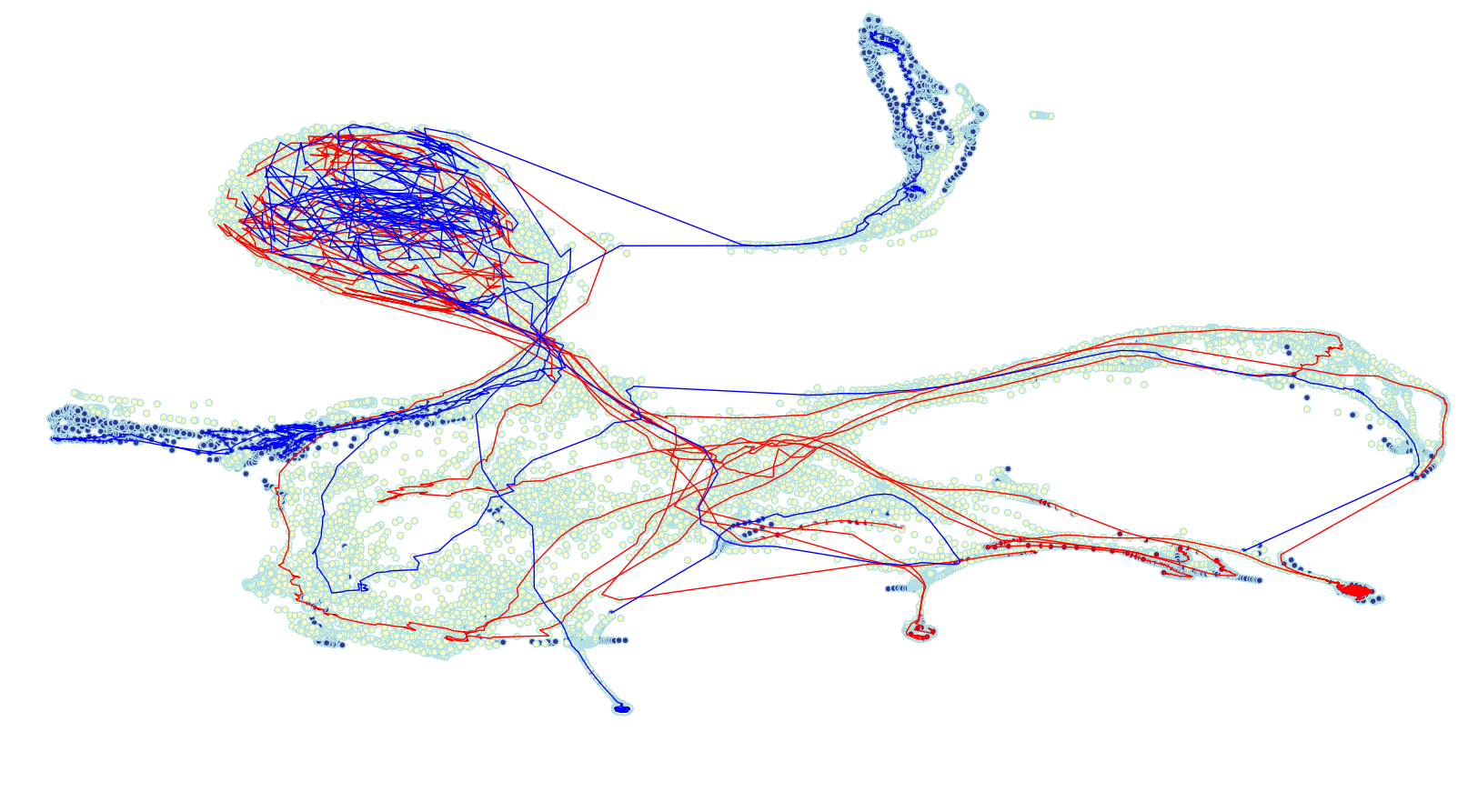

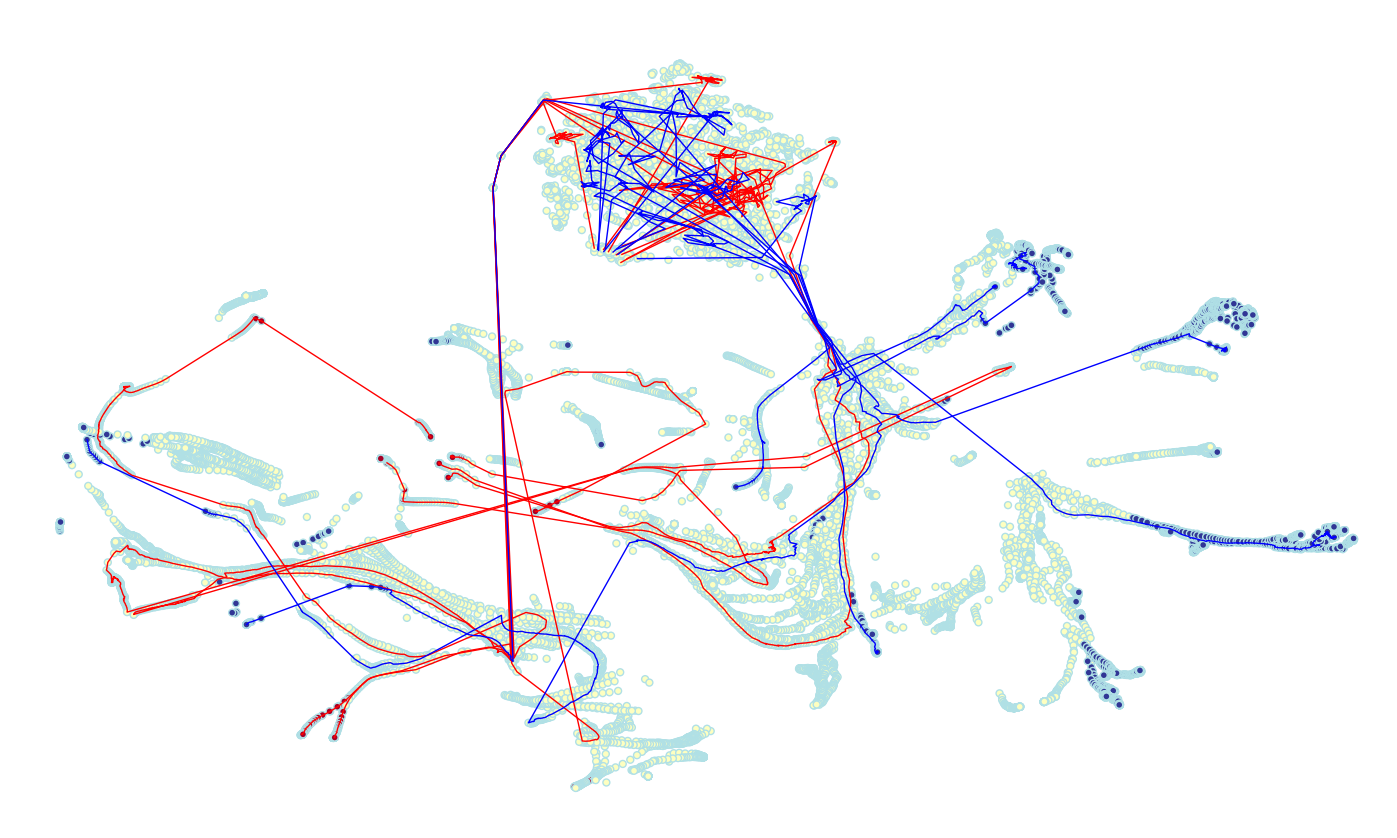

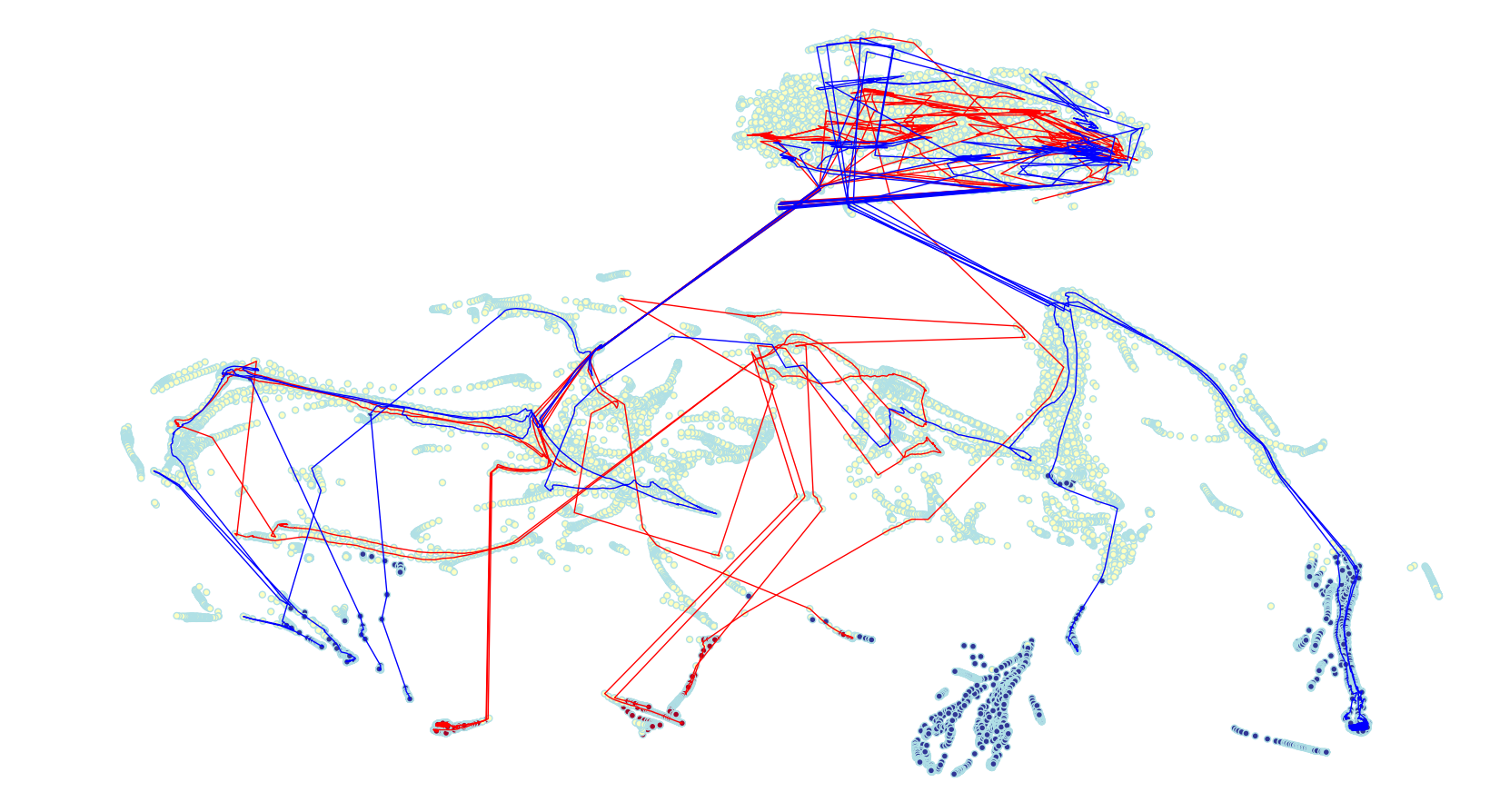

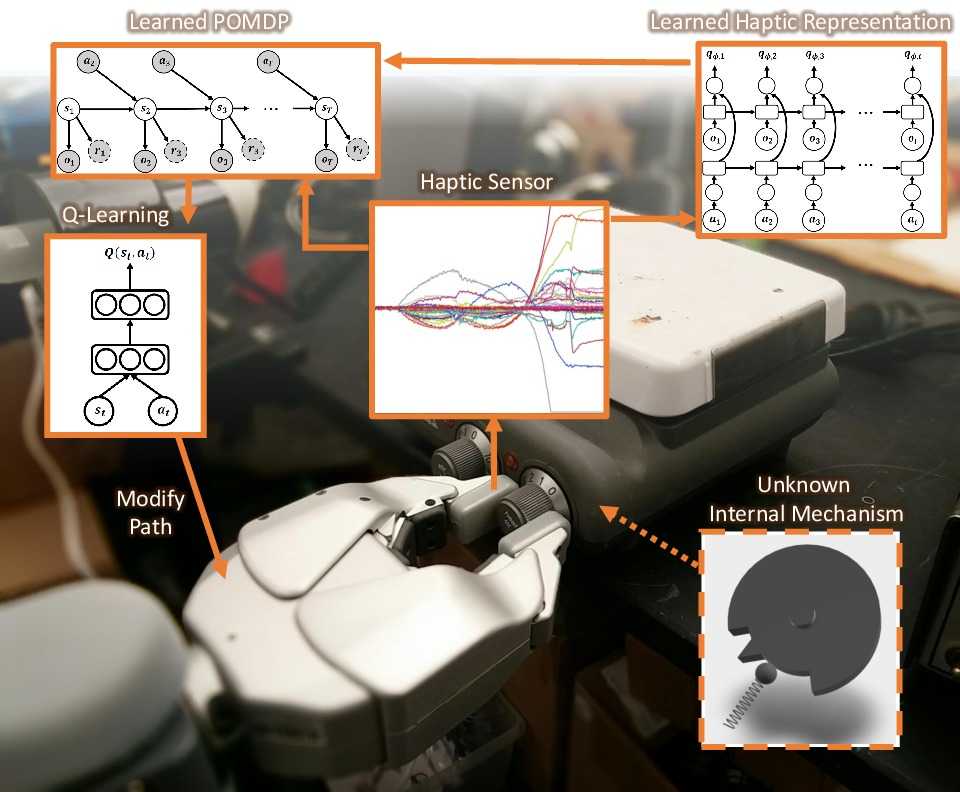

The sense of touch, being the earliest sensory system to develop in a human body [1], plays a critical part of our daily interaction with the environment. In order to successfully complete a task, many manipulation interactions require incorporating haptic feedback. However, manually designing a feedback mechanism can be extremely challenging. In this work, we consider manipulation tasks that need to incorporate tactile sensor feedback in order to modify a provided nominal plan. To incorporate partial observation, we present a new framework that models the task as a partially observable Markov decision process (POMDP) and learns an appropriate representation of haptic feedback which can serve as the state for a POMDP model. The model, that is parametrized by deep recurrent neural networks, utilizes variational Bayes methods to optimize the approximate posterior. Finally, we build on deep Q-learning to be able to select the optimal action in each state without access to a simulator. We test our model on a PR2 robot for multiple tasks of turning a knob until it clicks.